Cracking the machine learning interview: System design approaches


The authors also presented evidence that it was cost-effective and detailed the implementation lessons learned from the qualitative data collected for the study. A specific computerized provider order entry (CPOE) function that demonstrated statistically significant improvement in 2022 was automatic deprescribing of medication orders, with the relevant information communicated to pharmacies. Deprescribing is the planned and supervised process of reducing the dose of, or stopping, a medication that is no longer beneficial or could be causing harm. That study showed an immediate and sustained 78% increase in successful discontinuations after the software was implemented. The systems were not perfect, however: a small percentage of medications were unintentionally cancelled. Finally, another study developed and implemented an algorithm to detect patients in need of follow-up after test results.

Private network policies for Amazon OpenSearch Serverless

The algorithm showed some process improvements, but outcome measures were not reported.

When data moves between components in a distributed system, the data fetched from a node may arrive corrupted. This corruption can be caused by faults in storage devices, networks, or software. To detect it, a system computes a checksum of the data when storing it and keeps the checksum alongside the data. When the data is read back, the checksum is recomputed and compared with the stored value; a mismatch means the data was corrupted.
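The checksum idea can be sketched in a few lines of Python. This is a minimal illustration, not a specific system's implementation: the choice of SHA-256 and the function names `store` and `verify` are assumptions made for the example.

```python
import hashlib


def store(data: bytes):
    """Return the payload together with its checksum (SHA-256 is an assumed choice)."""
    return data, hashlib.sha256(data).hexdigest()


def verify(data: bytes, checksum: str) -> bool:
    """Recompute the checksum on read and compare it with the stored one."""
    return hashlib.sha256(data).hexdigest() == checksum


# Hypothetical payload, stored once and then read back twice.
payload, digest = store(b"user-profile-v1")
intact_ok = verify(payload, digest)                 # data arrived unchanged
corrupted_ok = verify(b"user-profile-v2", digest)   # one byte differs in transit
```

If verification fails, a reader would typically discard the result and fetch the data from another replica.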

The Cloud and System Design


Microservices are a good fit if you want to build a more scalable application. Because each service is developed and deployed independently, you can scale the parts of the application that need it without scaling the whole system. If you work with a large or growing organization, microservices also let separate teams scale and customize their own services over time.

Real-time observability is essential for monitoring the model’s behavior in real-world scenarios. Model monitoring pipelines are crucial for tracking the performance of the deployed model.

Distributed system design patterns

System failures usually result in the loss of the contents of primary memory, while secondary storage remains safe. When a system failure occurs, the processor stops executing, and the system may reboot or freeze.

Database indexing makes it faster and easier to search through your tables and find the rows or columns you want. Indexes can be created on one or more columns of a database table, providing the basis for both rapid random lookups and efficient access to ordered information. While indexes dramatically speed up data retrieval, they typically slow down inserts and updates, because every write must also update the index. SQL joins allow us to access information from two or more tables at once.
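To make indexing and joins concrete, here is a small sketch using Python's built-in `sqlite3` module with an in-memory database. The `users` and `orders` tables, their columns, and the index name are hypothetical and exist only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL);
    -- Index on the column used for lookups and joins.
    CREATE INDEX idx_orders_user_id ON orders(user_id);
    """
)
conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "ada"), (2, "lin")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 1, 9.5), (2, 1, 20.0), (3, 2, 5.0)],
)

# Ask SQLite how it would execute a lookup on the indexed column.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT * FROM orders WHERE user_id = 1"
).fetchall()
plan_text = " ".join(str(row[-1]) for row in plan)

# Join the two tables to get each user's total spend.
totals = conn.execute(
    """
    SELECT u.name, SUM(o.total)
    FROM users AS u
    JOIN orders AS o ON o.user_id = u.id
    GROUP BY u.name
    ORDER BY u.name
    """
).fetchall()
```

Inspecting `plan_text` shows whether the lookup uses `idx_orders_user_id` instead of scanning the whole table, and `totals` holds one row per user from the join.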


The study found about 90% accuracy in alerts across two sites but a wide difference in the frequency of appropriate action between the sites (83% and 47%). This suggests that contextual factors at each site, such as culture and organizational processes, may affect success as much as the technology itself.

Generative artificial intelligence (AI) has gained significant momentum, with organizations actively exploring its potential applications. As successful proofs of concept transition into production, organizations increasingly need enterprise-scale solutions.

What kind of people are best suited for studying Machine Design?

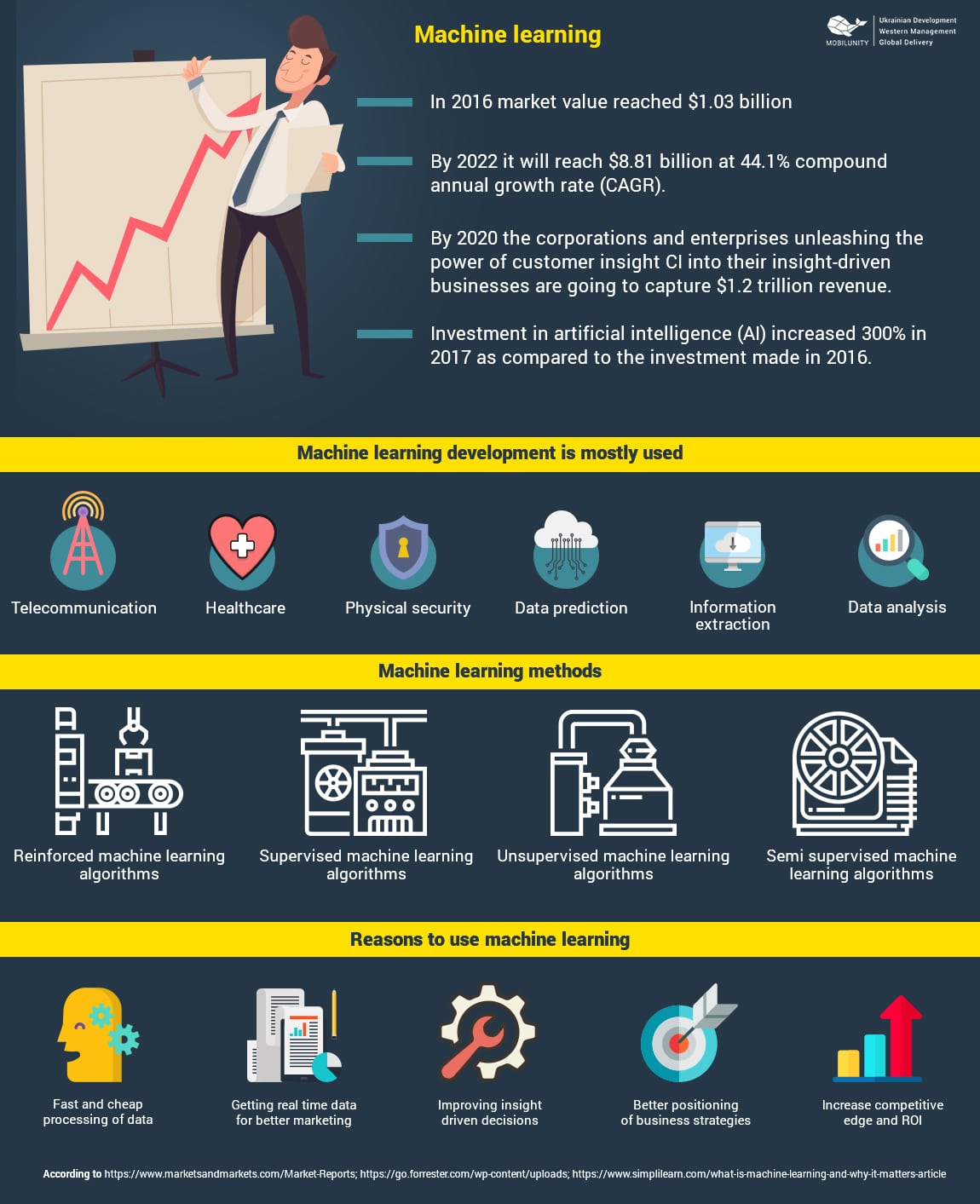

System design for machine learning refers to the process of designing the architecture and infrastructure necessary to support the development and deployment of machine learning models. It involves designing the overall system that incorporates data collection, preprocessing, model training, evaluation, and inference. Machine learning systems design is the process of defining the software architecture, infrastructure, algorithms, and data for a machine learning system to satisfy specified requirements. You’ll walk step-by-step through solving these problems, focusing in particular on how to design machine learning systems rather than just answering trivia-style questions. Once you’re done with the course, you’ll be able to not just ace the machine learning interview at any tech company, but impress them with your ability to think about systems at a high level. If you have a machine learning or system design interview coming up, you’ll find the course tremendously valuable.

We’re generally open to auditing requests from all Stanford affiliates. External requests will be decided on a case-by-case basis, mostly because the course is hosted on Canvas and we’re not sure how non-Stanford affiliates can access Canvas.

Convert intuitive ideas into concrete features using normalization, smoothing, and bucketing.
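The three feature techniques named above can each be sketched in a few lines. The text does not say which variants are meant, so the specific choices here (min-max normalization, additive smoothing toward a prior rate, and boundary-based bucketing) are assumptions, as are all function and parameter names.

```python
from bisect import bisect_right


def min_max_normalize(values):
    """Rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def smoothed_rate(clicks, impressions, prior_rate=0.1, prior_weight=10):
    """Pull a noisy ratio toward a prior so rare items do not get extreme rates."""
    return (clicks + prior_rate * prior_weight) / (impressions + prior_weight)


def bucketize(value, boundaries):
    """Map a continuous value to the index of the bucket it falls into."""
    return bisect_right(boundaries, value)


normalized = min_max_normalize([10, 20, 30])
cold_start_rate = smoothed_rate(clicks=0, impressions=0)
age_bucket = bucketize(25, boundaries=[18, 30, 50])
```

With no observations at all, the smoothed rate falls back to the prior, which is the usual reason for smoothing ratio features.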


Training and serving are not skewed: the feature generation code for training and inference should be the same. Model specs are unit tested: it is important to unit test the correctness of the model algorithm and the model API service with random inputs, to detect any error in the code or response. The data pipeline has appropriate privacy controls: for example, personally identifiable information (PII) should be handled properly, because any leakage of this data may lead to legal consequences.
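One way to picture the first two checks is a single feature function shared by the training and serving paths, plus a test that feeds random inputs through the serving path. Everything below is a hypothetical sketch: the feature schema, the stand-in logistic model, and its weights are made up for illustration.

```python
import math
import random


def make_features(raw):
    """Single feature function used by BOTH training and serving (hypothetical schema)."""
    return [math.log1p(raw["purchase_count"]), 1.0 if raw["is_member"] else 0.0]


def predict(features):
    """Stand-in logistic model with made-up weights."""
    weights, bias = [0.8, 1.2], -1.0
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def build_training_row(raw, label):
    """Training path: features come from make_features."""
    return make_features(raw), label


def serve(raw):
    """Serving path: the same make_features call, so the two paths cannot drift apart."""
    return predict(make_features(raw))


def check_model_api(trials=1000, seed=0):
    """Random-input test: scores must be valid probabilities and match the training path."""
    rng = random.Random(seed)
    for _ in range(trials):
        raw = {"purchase_count": rng.randint(0, 10_000), "is_member": rng.random() < 0.5}
        score = serve(raw)
        train_features, _ = build_training_row(raw, label=0)
        if not 0.0 <= score <= 1.0:
            return False
        if score != predict(train_features):
            return False
    return True


all_checks_pass = check_model_api()
```

Keeping feature logic in one function is a design choice rather than the only option; a shared feature store or a serialized preprocessing pipeline serves the same purpose.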

Online prediction

Some examples of these offline metrics are AUC, F1, R², MSE, Intersection over Union, etc. Model issues, such as performance degradation, bias, and fairness, need to be monitored and addressed. Tracking the model’s performance over time helps identify degradation in accuracy or other metrics, enabling proactive measures like retraining or model updates. Model bias and fairness monitoring involve assessing the model’s predictions for different demographic groups and ensuring equitable outcomes.
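As a rough illustration, three of the offline metrics listed above (F1, MSE, and Intersection over Union) can be computed directly from their standard definitions. The function names and the `(x1, y1, x2, y2)` box format are assumptions made for this sketch; in practice a library such as scikit-learn would normally be used.

```python
def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def mse(y_true, y_pred):
    """Mean squared error for regression outputs."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def iou(box_a, box_b):
    """Intersection over Union for axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


f1 = f1_score([1, 1, 0, 0], [1, 0, 1, 0])
error = mse([1, 2, 3], [1, 2, 5])
overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

Tracking the same functions on fresh labeled data over time is one simple way to spot the performance degradation mentioned above.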

Google File System (GFS) is a scalable distributed file system designed for large data-intensive applications, like Gmail or YouTube. GFS is designed for system-to-system interaction, rather than user-to-user interaction. The architecture consists of GFS clusters, which contain a single master and multiple ChunkServers that can be accessed by multiple clients.

The deployment technique can vary based on the specific requirements and constraints. Cloud-based deployment allows for flexible and scalable infrastructure, with platforms like AWS, Azure, or GCP offering convenient services for hosting and managing models.

Another set of useful metrics is the non-functional metrics. You can mention these to the interviewer to show that you are thinking of all the possible ways to measure the benefit of a model. You should have written those answers down on the whiteboard (or on the online platform if you are doing the interview virtually). Please note that, depending on the use case, some questions may not be relevant, so you do not need to ask all of them.

Model governance and versioning are important considerations in the deployment phase. Establishing proper documentation, version control, and tracking of model changes ensures reproducibility, traceability, and compliance with regulatory requirements.
